Last modified: 08 January 2025

Populating historical data to data model tables

About

AEMO provides NEM participants with a monthly archive containing a historical subset of the Electricity Data Model data. This page lists the prerequisites and the four procedures participants can follow to load the historical data into the data model tables.

Prerequisites

On AEMO's Market Data NEMweb, navigate to Data Model > Monthly Archive and download the archived data.

Using the supplied SQL Loader Wrapper script

-

Load the monthly DVD to drive or copy the files to a directory.

-

Unzip the data files.

-

In the target schema, disable the Foreign Key constraints if you have implemented any.

-

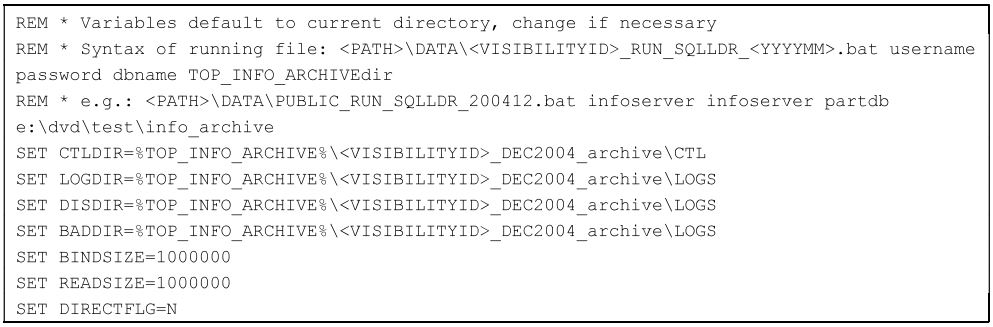

To a directory, save the <VISIBILITYID>_RUN_SQLLDR_<YYYYMM>.bat script with write access and edit it.

-

In the script, locate the following section, make the necessary changes and save the file.

-

On the command line, set the working directory (using CD) to the directory containing the <VISIBILITYID>_RUN_SQLLDR_<YYYYMM>.bat script and run the script.

-

At the location specified by the LOGDIR entry set in the <VISIBILITYID>_RUN_SQLLDR_<YYYYMM>.bat, check the produced logfiles for any errors.

-

In the target schema, enable the Foreign Keys if you disabled any in step 3.
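The unzip step in this procedure can be scripted when an archive contains many files. The following is a minimal Python sketch, not part of the AEMO-supplied scripts; the function name `unzip_archive_files` and both directory arguments are hypothetical and chosen only for illustration.

```python
import zipfile
from pathlib import Path


def unzip_archive_files(source_dir, target_dir):
    """Extract every .zip file found in source_dir into target_dir.

    Returns the sorted names of all extracted members.
    """
    source = Path(source_dir)
    target = Path(target_dir)
    target.mkdir(parents=True, exist_ok=True)

    # Match the extension case-insensitively (.zip, .ZIP, ...).
    archives = sorted(
        p for p in source.iterdir()
        if p.is_file() and p.suffix.lower() == ".zip"
    )

    extracted = []
    for archive in archives:
        with zipfile.ZipFile(archive) as zf:
            zf.extractall(target)
            extracted.extend(zf.namelist())
    return sorted(extracted)
```

Calling `unzip_archive_files("DATA", "DATA")` would extract the files alongside the zipped originals, assuming the zipped files sit in a directory named DATA.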

SQL Loader command line

-

Unzip the zipped csv file.

-

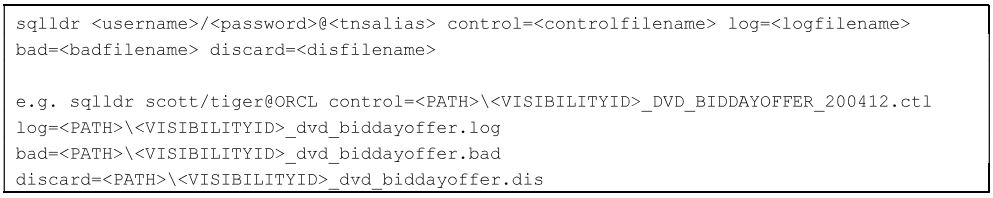

Run the SQL Loader utility as follows:

-

Check the log files for any errors.

-

Check the discard file for any records discarded due to failing the WHEN clause.

Each discard file is expected to have 3 rows beginning with C, I and C respectively - these are comment rows and need to be discarded. Any rows beginning with D are data rows and are expected to load successfully.

-

Check the bad file for any rows not able to be loaded.

Unsuccessful loading of a row is due usually to a column data type mismatch or number of columns mismatch between the data in the csv file and the structure of the table in your target database.
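The discard file check described above can be automated. The sketch below is an illustration only: it assumes the row type is the first comma-separated field on each line, and the function names are hypothetical. It counts discarded rows by type and flags any D rows, which are the ones that should have loaded.

```python
from collections import Counter


def summarise_discard_file(lines):
    """Count discarded rows by their first comma-separated field.

    Returns (counts, data_rows), where data_rows holds the rows starting with D.
    """
    counts = Counter()
    data_rows = []
    for raw in lines:
        line = raw.strip()
        if not line:
            continue  # ignore blank lines
        row_type = line.split(",", 1)[0].upper()
        counts[row_type] += 1
        if row_type == "D":
            data_rows.append(line)
    return counts, data_rows


def discard_file_is_clean(lines):
    """True when the file holds only the three expected comment rows (C, I, C)."""
    counts, data_rows = summarise_discard_file(lines)
    return not data_rows and counts == Counter({"C": 2, "I": 1})
```

A file object can be passed directly, for example `with open(path) as f: discard_file_is_clean(f)`, where `path` is whatever discard file location was given to SQL Loader.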

Using the supplied BCP Wrapper scripts

-

Load the monthly DVD to drive or copy the files to a directory.

-

In the target schema, disable the Foreign Key constraints if you have implemented any.

-

In the DATA directory, unzip the csv files.

-

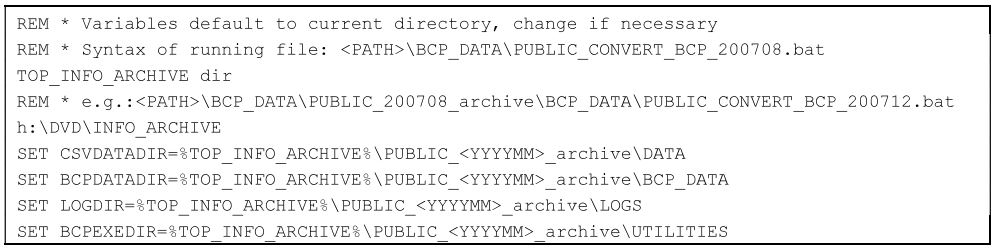

To a directory, save the <VISIBILITYID>_RUN_CONVERT_<YYYYMM>.bat script with write access and edit it.

-

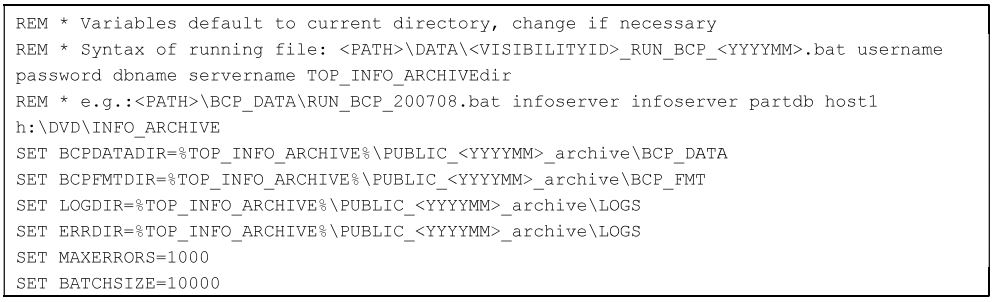

In the script, locate the following section, make the necessary changes and save the file.

-

On the command line, set the working directory (using CD) to the directory containing the <VISIBILITYID>_CONVERT_BCP_<YYYYMM>.bat script and run the script.

-

At the location specified by the LOGDIR entry set in the <VISIBILITYID>_CONVERT_BCP_<YYYYMM>.bat, check the produced logfiles for any file conversion errors.

-

In the script, locate the following section, make the necessary changes and save the file.

-

On the command line, set the working directory (using CD) to the directory containing the <VISIBILITYID>_RUN_BCP_<YYYYMM>.bat script and run the script.

-

At the location specified by the LOGDIR entry set in the <VISIBILITYID>_RUN_BCP_<YYYYMM>.bat, check the produced logfiles for any errors.

-

In the target schema, enable the Foreign Keys if you disabled any in step 2.
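The log checks in this procedure can be partly automated by scanning every file in the LOGDIR location. The sketch below is a rough aid rather than a definitive check: the log format is not described here, so matching on the word "error" is an assumption, and the function name `find_error_lines` and its `keywords` parameter are hypothetical.

```python
from pathlib import Path


def find_error_lines(log_dir, keywords=("error",)):
    """Return (file name, line number, text) for each log line containing a keyword.

    The default keyword is an assumption; adjust it to the messages your logs contain.
    """
    lowered = [k.lower() for k in keywords]
    hits = []
    for path in sorted(Path(log_dir).iterdir()):
        if not path.is_file():
            continue
        # errors="replace" avoids failing on unexpected byte sequences.
        with path.open(encoding="utf-8", errors="replace") as handle:
            for number, line in enumerate(handle, start=1):
                if any(k in line.lower() for k in lowered):
                    hits.append((path.name, number, line.rstrip()))
    return hits
```

An empty result means only that no line matched the keywords, so it is still worth reading the logs when a load looks incomplete.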

BCP command line

-

In the DATA directory, unzip the required csv file.

-

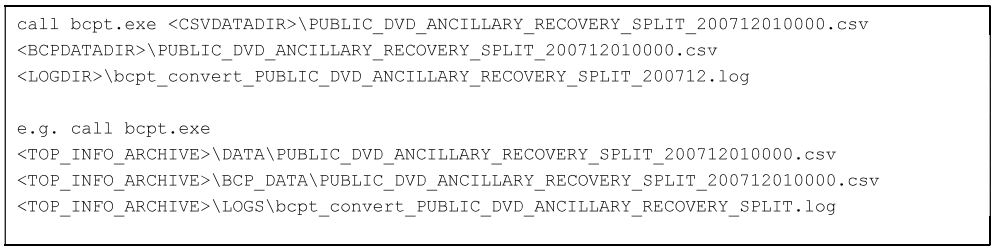

Run the BCPT.EXE file conversion utility as follows:

-

Check the log files and error files for any errors.

-

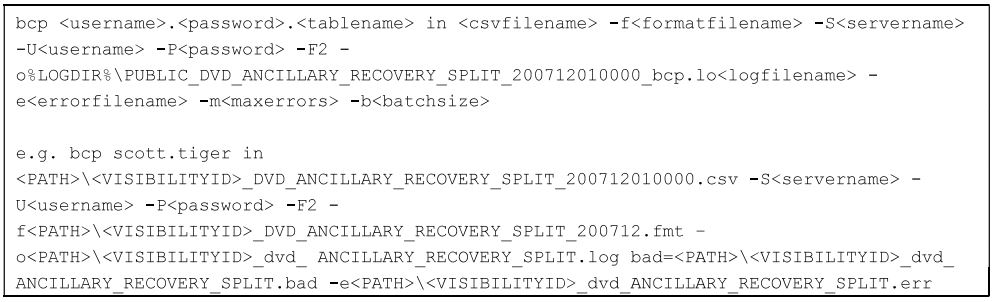

Run the SQL Server BCP utility as follows:

-

Check the log files and error files for any errors.

-

Check the bad file for any rows not able to be loaded.

Unsuccessful loading of a row is due usually to a column data type mismatch or number of columns mismatch between the data in the csv file and the structure of the table in your target database.
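One cause of rejected rows, a column count mismatch, can be partly checked before loading. The sketch below assumes that each I row in the CSV file names the columns for the D rows that follow it, so a D row with a different number of fields is suspect. It compares the file only against its own I rows, not against the table in the target database, and the function name is hypothetical.

```python
import csv


def find_column_count_mismatches(lines):
    """Return (row number, expected, actual) for each D row whose field count
    differs from the most recent I row."""
    expected = None
    mismatches = []
    for number, fields in enumerate(csv.reader(lines), start=1):
        if not fields:
            continue  # skip empty rows
        row_type = fields[0].strip().upper()
        if row_type == "I":
            expected = len(fields)
        elif row_type == "D" and expected is not None and len(fields) != expected:
            mismatches.append((number, expected, len(fields)))
    return mismatches
```

When reading from disk, open the file with `newline=""` as the `csv` module recommends, then pass the file object to the function.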